Spring-25 Semester Recap

The Spring 2025 semester has ended a while ago. And with that, the first two years of my PhD have passed in a blink of an eye. I am now a “PhD candidate” (formerly: “PhD student”). As usual, I find it’s important to review what I have learned after another academic term.

Outside LANding, a newly established esport center at UT Dallas

Research

There was a good piece of news last term: one of our manuscripts has been accepted to ACL 2025 (the world’s biggest NLP conference), which will be published in late July. It releases a dataset for holistically evaluating meme understanding abilities of any system.

- The process: This project started in October 2023, when our lab began to annotated the semantics of 950 memes from a dataset released in SemEval 2021. From a few sentences per meme (such as its background knowledge and final intent), the dataset evolved into one paragraph per meme, and eventually one set of multi-aspect multiple-choice questions per meme. By the end of this evolution, the annotations become deep — i.e., presenting intricate interpretations of all the nuances in the memes, and useful — they can directly be used to automatically benchmark model meme understanding capabilities by being multiple-choice questions.

- Manual and automation efforts: As simple as it sounds, the paper requires a lot of human labor. There are 16 co-authors of the paper, most of which contributing to the data collection stage. Automation was employed quite aggressively. At every annotation stage, I automated something in the procedure. For example, in generating questions, we used Adversarial Filtering with two competing models to auto-generate more confusing options for a quesiton.

- Submission: The paper was submitted 8 hours before the ARR deadline, which was a nice demonstration that I can to meet my advisor’s standard for submission without staying up until 6AM. The review process started with scores of 3, 3.5, and a 2 (over 5). We spent a lot of efforts in the rebuttal — running new experiments, measuring human performance, and doing more analysis. That resulted in a change from 3 to 4. The reviewer who gave a score of 2 was unresponsive, which was acknowledged by the meta-reviewer and eventually led to a good meta score of 3.5. I was a hard-won battle!

Fourteen Eighteen, a local coffee shop in Plano with laid-back vibe where I usually go to for work

While MemeQA is my first paper that gets accepted right in the first submission, another paper of mine (and others) has gone through a few submission cycles. So far, it does not have a sufficiently high score for publication in an A* conference. So we are planning to submit in to a A-level conference in the coming months.

- It is beneficial to try to understand what causes such a difference between MemeQA and the other paper. In fact, we were equally invested in both papers. The other paper, over a long period of time, accumulates even more human labor than MemeQA. I think one of the causes is about novelty. When my lab first worked on the paper, which was even before when I joined, the paper’s idea was novel. However, after almost 2 years, the idea becomes obsolete in such an ever-changing field of NLP. Despite many improvements added along the way, the idea in its core is no longer novel. Learning from this, future problems to tackle should be harder (but still viable). As Andrej Karpathy said in a blog post, “a 10x harder problem is at most 2-3x harder to achieve”. Given all the time I have in my PhD, I want to work on those 10x problems.

Last semester was also the first time I used closed-sourced models extensively in experiments. We spent a significant sum of money in OpenAI API (for GPT models) and Nebius AI (for over-sized open-source models). During the process, I observed that:

- Those expensive models are undeniably good — they consistently give good/correct answers novel questions. But it also makes me worried because I have exposed those novel questions to OpenAI — would they store the held-out questions to cheat future tests?

- Spending money on API calls isn’t that scary. 100 inference calls usually cost less than $1. But code verification must still be done thoroughly. I am a thorough (i.e., overthinking) programmer, so it is not a big deal though.

Lastly, as with any second-year PhD student, I did my Qualification Exam (QE). As my advisor put it, QE is a formal way for the PhD program to check if a student is making progress towards the dissertation. At UTD, QE has two formats, one of which requires the student to write a survey paper in a field of research and defend their arguments with a committee. Such a topic is expected to be the student’s field of research for the next few years.

- Rather unsurprisingly, my QE topic is on computational meme understanding (CMU). I enjoyed the that QE process, because it gave me plenty of time to dig deep into the major questions I have about this field: why it is worth to process memes automatically, whether there is a meaningful way to organize existing research in CMU, and what are the promising next steps for researchers (including me) to advance this field in the next 10 years.

- In the end, I have developed a framework to organize all tasks in meme processing. That framework helps make sense of all the things people are doing in the field and how advances in one direction can benefit the other. So far, all work in CMU that I have encountered can fit into that picture.

- Doing the QE was also the chance for me to just read. I selected about 15 most important papers in the field and read them in depth.

- Rathering depressingly, I observe a bad reading and citation culture in Computer Science research where people only read the abstracts before citing many papers in their introduction. It creates an illusion that the authors are knowledgeable for knowing so many papers. At the same time, it makes it hard for readers, even the experienced ones, to evaluate the merit of those references.

- My college roommate told me that, in philosophy, a paper only cites a handful of works. Furthermore, each citation is seen as a minus point because the authors have to depend on someelse work and is producing fewer novel ideas. Even though this is rather extreme, it is good to be aware of the papers we are citing so that every citation counts.

- My QE ended with a successful presentation.

- Before the presentation:

- Despite being given only 40 minutes of presentation, my slide deck got swollen into 90 slides. Unsuprisingly, I went way over time (20 minutes).

- I could only rehearse with half of the slides. Therefore, I was quite worried a few minutes before the presentation.

- But when it actually started, I was slowly getting in to a flow state and was comfortable to show off my knowledge. The committee liked the framework I propose, deeming my work as a “useful tutorial to the field”.

- Before the presentation:

- Aftermaths, I feel pretty good. Those are the feelings of being proficient in a research field, of holding more valuable knowledge in that topic than most of other researchers, and of some sort of academic pride. It feels as if I can teach a course on the topic. It gives me joy for continuing doing research.

- In that same day, I also aced the final exam in my AI course. On that night, I briefly wrote in my journal: “just a day where I passed my QE and aced my AI exam.” 🙂 I don’t often enjoy that much success in a day.

Coursework

I took two organized course last semester: AI and NLP. Overall, I still learned a lot.

The AI course by Haim Schweitzer was initially exciting, but gradually became boring:

- The main topics of the class include search, logical inference, Bayesian networks, and reinforcement learning.

- Search was the most exciting part.

- The professor has a significant part of his research in this area.

- Also, the main project he gave us is about building a game-playing agent for a two-player board game he came up with. Then, everyone is invited to have their algorithms compete in a tournament.

- The reward for the winner is a lot of bonus points in our final exam. But that is not the thing that made me excited. Rather, it is the problem itself. I spent almost my entire undergrad research time to analyze game-playing algorithms, with a paper publised in ICAPS. I remember simulating algorithms on synthetic games again and again to learn about their behaviors. Now, I have a change to apply those experience into an actual game, playing with actual people. There’s a thrill in that that synthetic games do not offer.

- Originally, the game appears to be a complex one that is hard to master by humans. However, it turns out to have a trivial solution that can be memorized easily by humans. But it actually took me a long while to realize that. During the process, I implemented both Alphabeta and Monte Carlo Tree Search as playing agents, giving them some weak heuristics. And then, seeing that the game only has roughly 30M symmetric states, I wrote a simple dynamic programming algorithm to solve the game altogether. Using the solved result to play with my friends, I realized the winning strategy is simple and clearly unbeatable. I could analytically prove that the game is a forced win for the first player as long as they long that simple strategy.

- The fact that the game is too easy for the first player makes the tournament no longer exciting… I was disappointed that all my time spent on solving the game did not turn into something tangible. But I did have a lot of fun. And I may write a blog post about the game and my solution to it.

- The later topics are rather a repetition of what I already know after so many times learning and being a TA about the topics. I can’t help wondering if a grad-level AI course at a top CS program would be more interesting. But I guess that was all I can ask for a course at UTD.

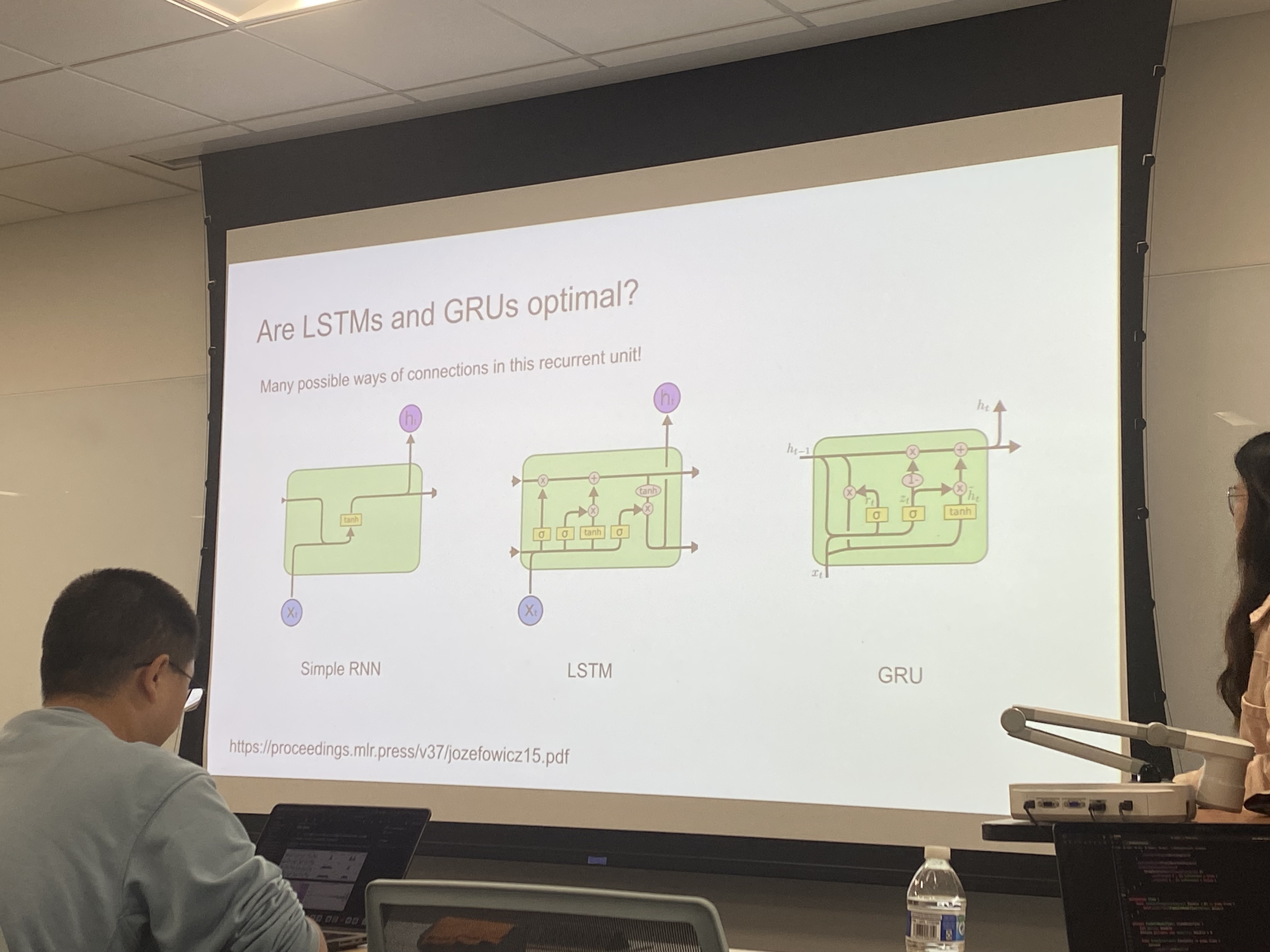

A lecture slide on LSTM and GRU, where we went for the first principle and ask if those architecture are already optimal

NLP by Zhiyu Zoey Chen was much more exciting:

- Working in NLP, I wanted to take this course since the beginning of my PhD. However, I had to delayed it due to various reasons. Perhaps it is because I have already had prior exposure to the field which already enables me do my research.

- My first exposure to modern NLP was via an REU with thay Tien Nguyen in Software Engineering. As a pioneer in applying AI into Software Engineering, he started working on language-oriented neural networks way before the release of ChatGPT. In winter 2021, he showed me the papers that introduced word2vec, transformer, BERT, and CodeBERT.

- Then, in summer 2022, I was reading Jurasky’s SLP3 while working on an NLP REU at NUS. So I already self-taught quite a bit of stuff in NLP.

- Zoey recently obtained her PhD from UCSB. As a new-generation researcher, she managed to keep updated with a lot of advances in modern NLP research. In her lectures, she talked about how X and ChatGPT helped her do that.

- Her lectures are full of insights and updated knowledge. The lecture series can be simply divided into two parts: traditional NLP and modern NLP with LLMs.

- In traditional NLP, she covered:

- language modeling with n-grams

- word embeddings – the sparse (TF-IDF, PPMI) and the dense (word2vec, GloVe)

- neural language models – FFNN for language modeling

- Part-of-Speech Tagging – using Hidden Markov Model, Maximum Entropy Markov Model, and Conditional Random Fields

- Constituency Parsing – the TreeBanks dataset, (P)CFG + CYK, neural constituency parsing

- Dependency Parsing – Arc-standard algorithm and neural dependency parser

- Meanwhile, in modern NLP, she covered the very exciting topics:

- RNN, LSTM, GRU

- The Seq2seq formulation with the first prominent success in Neural Machine Translation, attention mechanism, and pointer networks

- Transformers architecture – attention mechanism (v2), the transformer block, positional encoding, and the LM head

- LLM – encoder and decoder, pretraining and finetuning, and the model evolution (GPT, BERT, RoBERTa, AlBERT, DistillBERT, T5)

- LLM pretraining: KV cache, scaling laws

- LLM prompting: the anatomy of a prompt, few-shot prompting, Chain-of-thought, prompt tuning, ways to prompt

- LLM finetuning – including task tuning, instruction tuning, chat tuning, distillation, LoRA

- LLM inference: many decoding methods

- LLM RL: reward modeling, PPO

- In traditional NLP, she covered:

- Quite surprisingly, this class has no exams but only a big project where students have to do real NLP research. Somehow, a classmate found my contact, reached out to me to form a project group. We had a blast building a meme generation system. The result is to be shared, hopefully via a publication.

- At this point, I can now say I have had proper training in NLP. But funny enough, NLP is still so much more than what was covered in the class. The professor said that, given the current rate of progress in NLP, her lectures will become obsolete right in the next semester. After all, it is not only challenging to keep up with the advances but also exciting because there are so many open-access knowledge being produce everyday for us to use for life-improving applications.

Life?

One of the ways I have changed over the year in my blogging is that I feel less comfortable to talk about my personal life here. I shared my website with more people, including those to whom I am not ready to remove the social barrier yet. But I do want to stick to one of my original goals when starting the blog, which is to encourage discussions about emotions.

So, besides the things I study, here are some of the things I have experienced:

- I was having a ‘zen fever’. That is, I was so stunned by the magic of mindfulness practice and amazed by how it changed my perspective on life. I walked more slowly, ate more slowly, and went to a local meditation center regularly.

- It was also another lunar new year away from home. On that morning, I wore aodai for the first time. That aodai was bought by my mom before my departure to the US because she didn’t want me to be excluded from the events by the Vietnamese students at UTD. I wore it to a Vietnamese mall, hoping to join people celebrating Tet. Who would know that there was no celebration happening in the mall, and absolute no one wearing the traditional costume like me. I had a bowl of ‘bun rieu’ and a cup of ‘che’, then go home (on a bike).

- When not doing research, I had a lot of lone time going to cafe (to work), eating out, and or just sitting on public buses. I went to bus so much that I started to get acquainted to some drivers.

- I finally recovered from the injury last year to be back to Ultimate. This time I could go with the team to three tournaments: Dust Bowl (Tulsa), Centex (Austin), and Sectionals (Dallas). It was good to be back. I loved it when being shouted out by someone for doing something good on the field. I started to enjoy the game and its strategies more. However, I somehow still do not feel quite belong to this community. And perhaps, I would say bye to the team to put more time into research and sleep.

Shout-out circle of WOOF after winning the second place at North Texas College Sectionals

- And overall, I spent much less time with people.

- Jeongsik has left the lab. Shengjie doesn’t come very often. And my advisor seems to prefer virtual calls than in-person. So I see no point to come to the lab anymore.

- The Mixers organized by the department suck. Most of the time, the number of people in the audience is less than the number of people in the presenting lab.

- I miss the time at Fulbright, at NUS, and at home. Life was filled with heart-warming human interactions.

-

Socially, the only things I look forward to are (1) calls to home and (2) trips out of state to visit friends. So I travelled as much as I can.

- Right at the start of the summer, I travelled to Virginia to visit Huyen, my best friend who graduated from her Masters.

- Then we went to Boston as tourists. I set foot on MIT and Harvard – one is as impressive as I imagined, while the other is underwhelming. I also saw Nanette, who recommended me to grad schools 2 years ago

- Lastly, before my internship, I travelled to Minnesota to visit my relatives.

- During all this time, my beloved ones at home are also going through life, some of whome have their defining life events happening. My sister aced her college entrance exam and is graduating from high school. Mom and dad have gone through their 25th marriage anniversary. My long-distant girlfriend was having her key career moments at which I wish I could be there to offer support. And my whole country was having a historic celebration of 50 years after our reunification day, which was dubbed ‘the national concert’.

A bench in a middle of a nature reserve area, in the middle of the city. I stood there and looked at it.

Remarks

The last three months was not quite eventful for this blog. I had only got one blog post out in May and two in April. But that doesn’t mean I stopped learning. In fact, I did learn a lot during those days. I start to think more about life after grad school. But at the same time, I think more about life in grad school. Anyway, I should do a better job at capturing my lessons here. This is a little space I created for myself to capture my learning, and I would love to make use of it.

Now I am in the Capital District of New York, having my first internship here in this country. Life has been much more enjoyable up here with new research friends, more trees, work-life balance, and a little more money to spend.

I will be back with some retrospective posting about my previous travels and experiences. In the meantime, I hope you all have a great summer!